Goal 1: CAN COMPUTER GET IMAGE? - YES

YIPPEE!

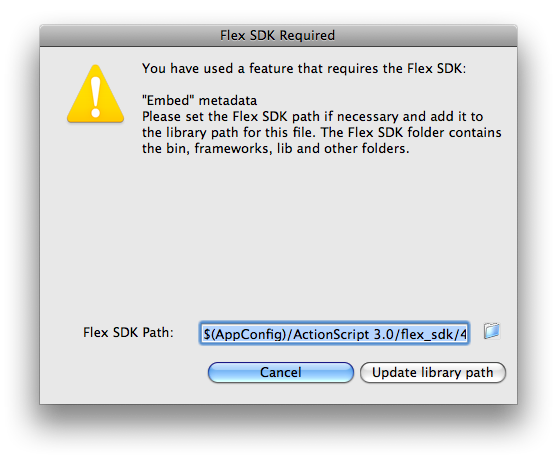

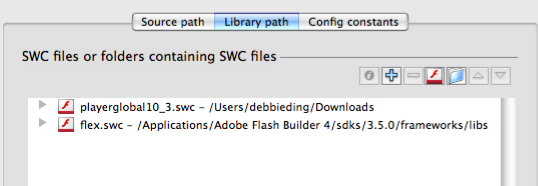

I followed the instructions on this O'Reilly page. I should add that there are many descriptions and tutorials out there, but not all of them will work for you. It also depends on what you want to do and what you can work with (for me, I can only code in AS3). I was flapping about aimlessly for half a day, downloading loads of random drivers and constantly installing and uninstalling them, but it did not get me anywhere. In the end, this informative and detailed introduction to openkinect and as3kinect really sorted me out.

I am running Windows 7 via Parallels Desktop 7 on a Unibody MacBook Pro (2.53 GHz Intel Core 2 Duo, 4GB RAM).

- Uninstall all the other goddamned kinect related drivers you've previously installed. UNINSTALL EVERYTHING THAT IS CONFUSING.

- Download freenect_drivers.zip and unzip them. Install them ONE BY ONE in Device Manager - http://as3kinect.org/distribution/win/freenect_drivers.zip

- Download and install Microsoft Visual C++ 2010 Redistributable Package - "The Microsoft Visual C++ 2010 Redistributable Package installs runtime components of Visual C++ Libraries required to run applications developed with Visual C++ on a computer that does not have Visual C++ 2010 installed." - If you installed Visual 2010 Express you can skip this part. (OR PRETEND TO "REPAIR" THE INSTALLATION IF YOU ARE PARANOID! Makes no difference, it seems, except to calm one's RAGING PANIC)

- Download the as3 server bridge - http://as3kinect.org/distribution/win/freenect_win_as3server_0.9b.zip

Contents of folder as described in the original guide:

- as3-server.exe—This is the bridge to ActionScript.

- freenect_sync.dll—Part of the OpenKinect API. This is used to get the data when it can be processed instead of when it is available.

- glut32.dll—This is an OpenGL library dependency.

- pthreadVC2.dll—Unix multithreading library

- freenect.dll—This is the OpenKinect main library (driver)

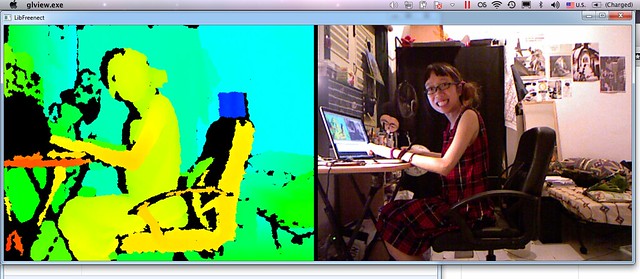

- glpclview.exe—Demo from OpenKinect (3D projection of depth and color).

- glview.exe—Demo from OpenKinect (2D projection of depth and color).

- libusb0.dll—USB library

- tiltdemo.exe—Demo from OpenKinect for controlling the Kinect's tilt position motor

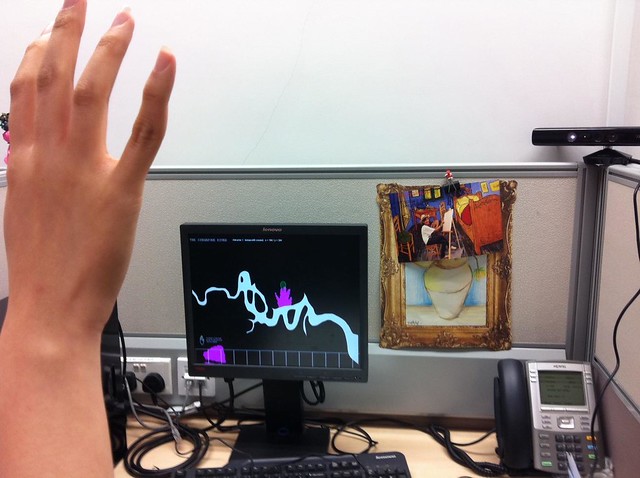

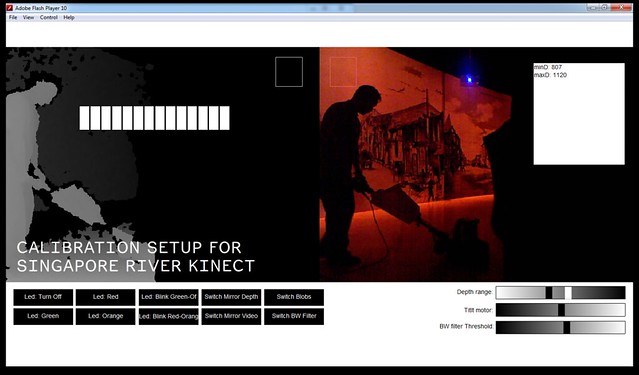

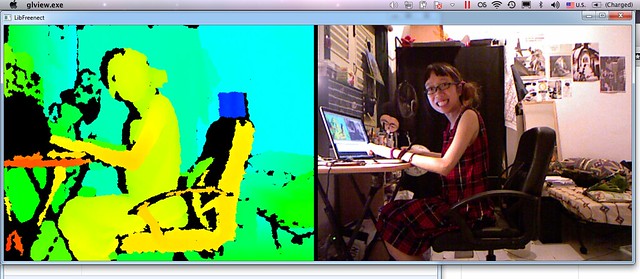

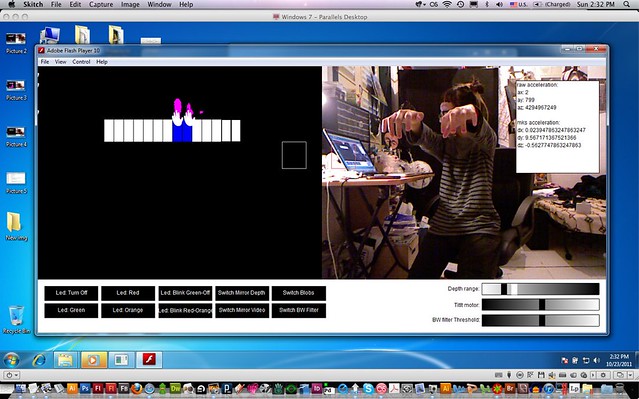

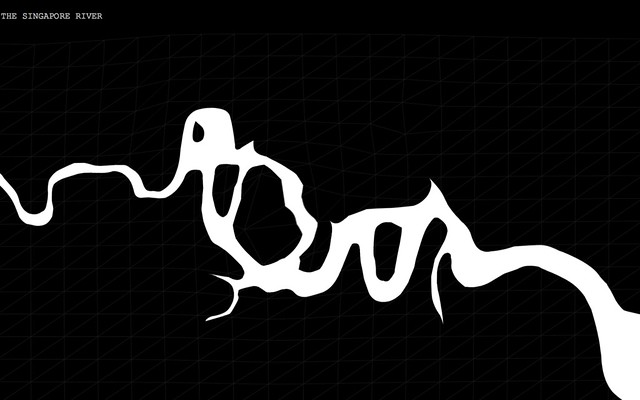

- Running glview.exe returns the above image.

Goal 2: CAN AS3SERVER SEND KINECT DATA TO AS3CLIENT? - YES

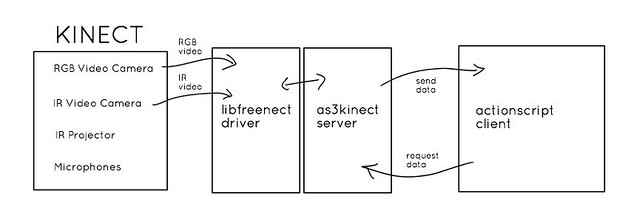

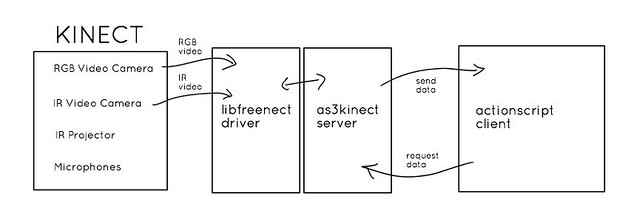

Because I needed to understand how this worked, I redrew the image explaining how the information gets from the Kinect to my AS3 client. (Although the original diagram was a lifesaver and I really appreciate it, I am also a spelling nazi, so itchyfingers here had to correct it.)

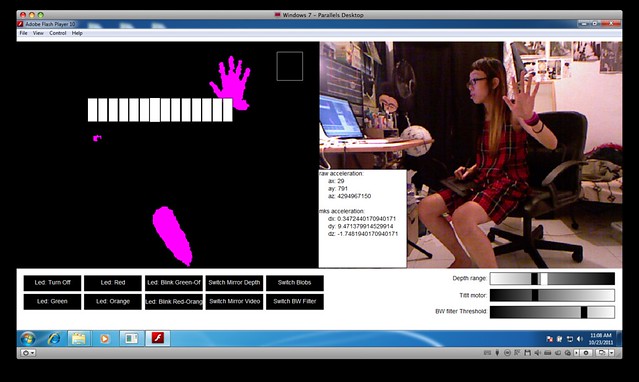

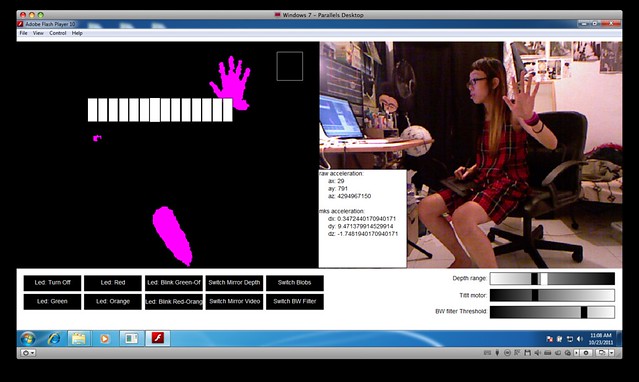

Test 1: Opening test.exe (flash executable) provided in freenect_demo_pkg_0.9b.zip

Switch on AS3server and then open test.exe.

YES, Flash can receive video data. The Kinect can also receive messages from Flash (changing LED colour, motor tilt, etc).

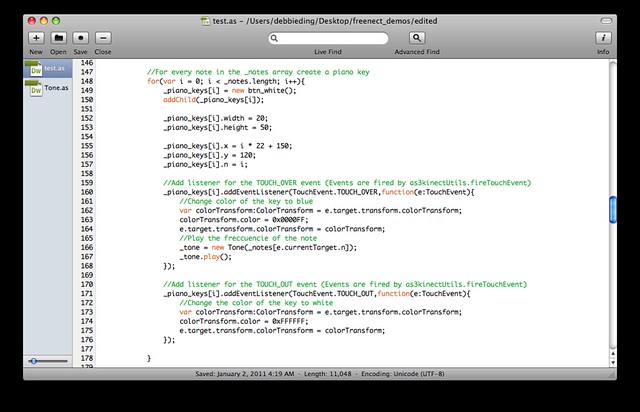

Test 2: Opening test.fla in Flash/Flash Builder to figure out how it works

In the given test.fla, there is a piano. When you present a "finger" shape (depending on depth calibration), it will play a tone when the finger overlaps the "piano".

The green spot represents the blob that is detected. The blue rectangle represents a successful hit. On a successful hit, a tone plays, corresponding to the location of the "piano key" in the entire scale. In the example, the tone is produced in AS3 using AS3 Sound Synthesis IV – Tone Class.

I DO NOT KNOW WHY IT THINKS THERE IS A BLOB AT THE TABLE. Is it because this is by depth? Should my "zone of interaction" just be a line instead? Why did I not think of sound as a response for interactions earlier? (Is it too late to get a speaker set up on site as well?)

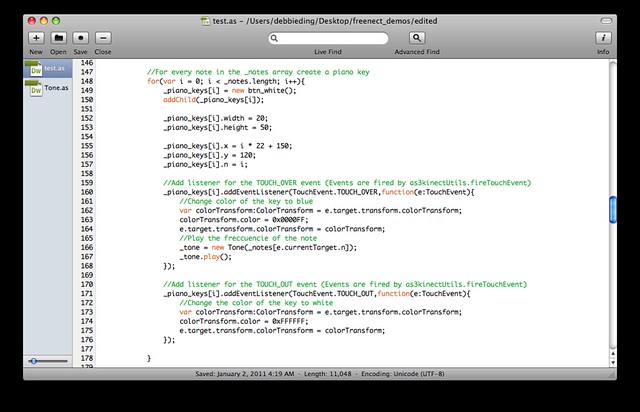

This is the portion of the code that refers to the piano function. It looks straightforward and humanly understandable.

Crucially, it says: "Events are fired by as3kinectUtils.fireTouchEvent"

More information about the as3kinectUtils class: Openkinect AS3 API.

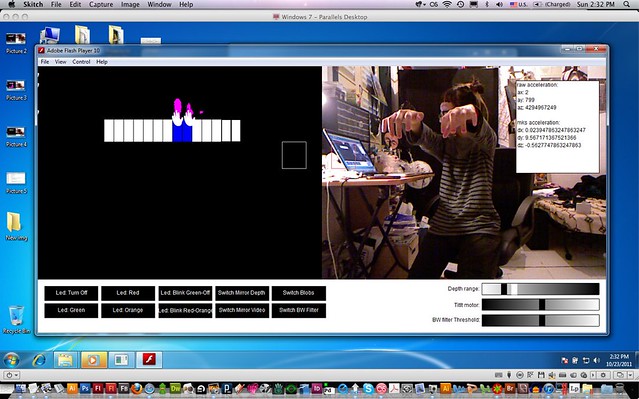

Side observation: latency becomes highly noticeable once I turn on blobs. I am not sure if Parallels or insufficient RAM is the reason for the latency during blob detection, although there appears to be no lag in normal RGB video. I will only know when I test it on a native Windows PC tomorrow.

At this point I am finally beginning to see the limitations of OpenKinect, as only OpenNI/NITE can do the skeleton detection (even though it could not do all the other things like change LED colour and stuff). Should I take the risk and try the OpenNI wrapper now that I can finally see it sort of working with OpenKinect? Time is very short and I have to make it work within the next 24 hours... Yes, my timeline is 24 hours. Oh decisions, decisions...

Goal 3: CAN AS3CLIENT RECEIVE BLOB DETECTION/TOUCHEVENTS ACCURATELY?

With this simple blob detection, the key is in the DEPTH DETECTION. My fingers in this example have to be at the right depth. This means that it is basically like the blob detection in reacTIVision, except that now I don't need a special screen or something, because my depth detection serves as the invisible screen/line. I can increase the blob size in as3kinect.as, where the default settings are defined under these constants:

public static const BLOB_MIN_WIDTH:uint = 15;

public static const BLOB_MAX_WIDTH:uint = 100;

public static const BLOB_MIN_HEIGHT:uint = 15;

public static const BLOB_MAX_HEIGHT:uint = 100;

Users would have to stand at a line and wave their hands in front of them. I think I am fine with that as an interaction.

This would also mean that I have to estimate the blob size. If I am going with blob sizes (estimated hand size as interaction), then I had better make some controls to change the size of blobs on the fly.
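Here is a minimal sketch of what such on-the-fly controls could look like. It assumes the limits are copied into ordinary variables, since the `public static const` values in as3kinect.as cannot be changed at runtime. The class name `BlobSizeControls`, the arrow-key choices, the step size, and the use of one min/max pair for both width and height are all hypothetical picks of mine and not part of as3kinect. How the new limits get fed back into the blob detection is left out, because that depends on how as3kinect reads them.

```actionscript
package {
    import flash.display.Sprite;
    import flash.events.Event;
    import flash.events.KeyboardEvent;
    import flash.ui.Keyboard;

    // Hypothetical helper (not part of as3kinect): keeps adjustable blob limits.
    public class BlobSizeControls extends Sprite {
        // Start from the defaults found in as3kinect.as.
        // Assumption: one pair of limits is used for both width and height.
        public var minSize:int = 15;
        public var maxSize:int = 100;

        // Assumed step size per key press, in pixels.
        private static const STEP:int = 5;

        public function BlobSizeControls() {
            // stage is null until this sprite is on the display list.
            addEventListener(Event.ADDED_TO_STAGE, onAdded);
        }

        private function onAdded(e:Event):void {
            removeEventListener(Event.ADDED_TO_STAGE, onAdded);
            stage.addEventListener(KeyboardEvent.KEY_DOWN, onKeyDown);
        }

        private function onKeyDown(e:KeyboardEvent):void {
            switch (e.keyCode) {
                case Keyboard.UP:    // allow bigger blobs
                    maxSize += STEP;
                    break;
                case Keyboard.DOWN:  // shrink the upper limit, but keep it above minSize
                    if (maxSize - STEP > minSize) maxSize -= STEP;
                    break;
                case Keyboard.RIGHT: // raise the lower limit, but keep it below maxSize
                    if (minSize + STEP < maxSize) minSize += STEP;
                    break;
                case Keyboard.LEFT:  // allow smaller blobs, never below STEP
                    if (minSize > STEP) minSize -= STEP;
                    break;
                default:
                    return; // ignore every other key
            }
            trace("blob size limits:", minSize, "to", maxSize);
        }
    }
}
```

Adding an instance to the display list (`addChild(new BlobSizeControls())`) is enough to start listening. The trace output makes it possible to note down values that work on site.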

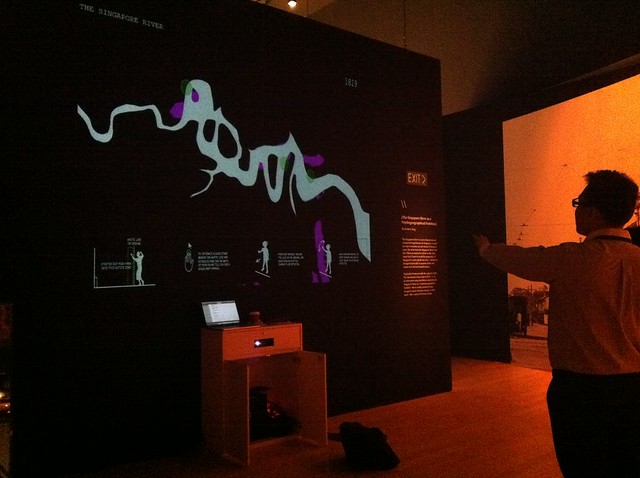

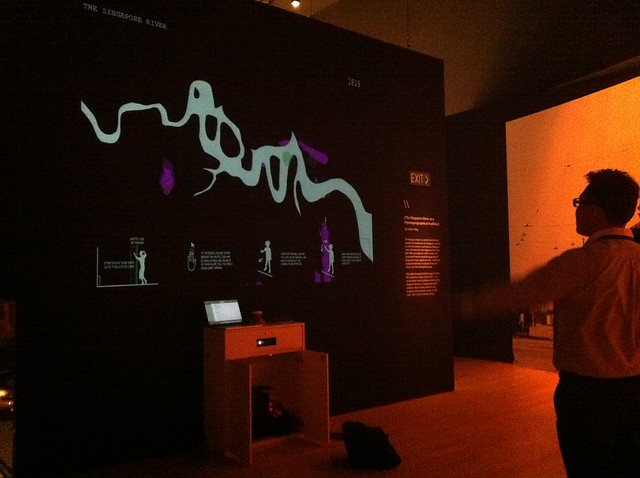

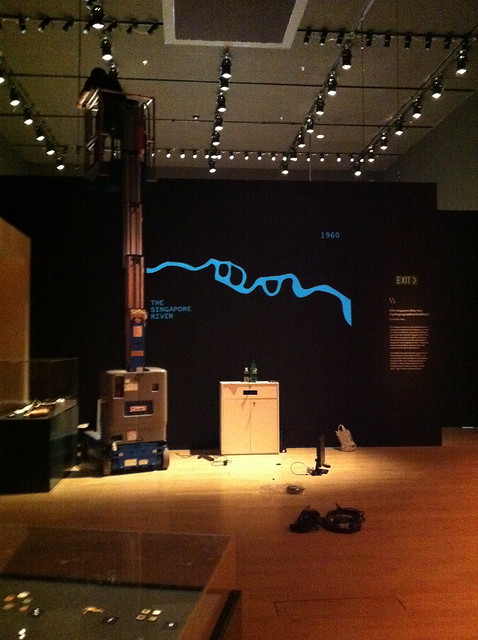

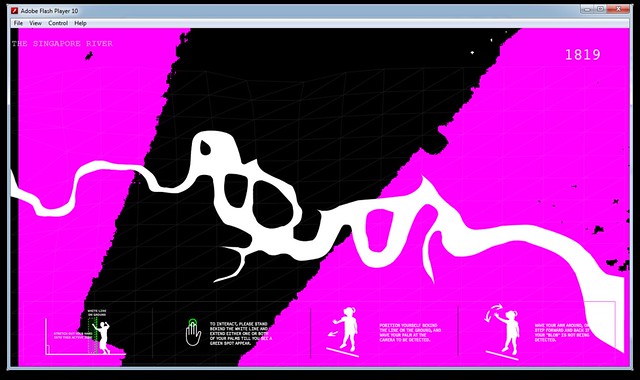

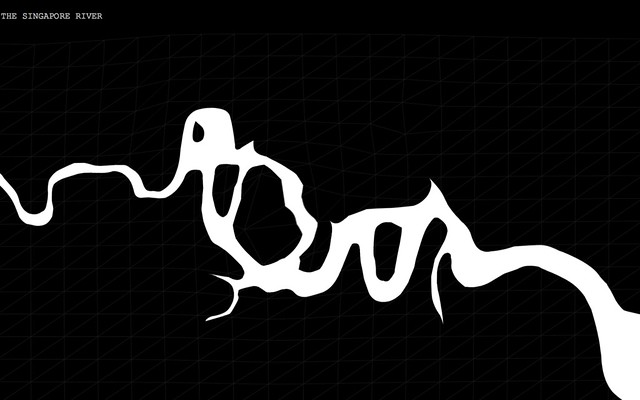

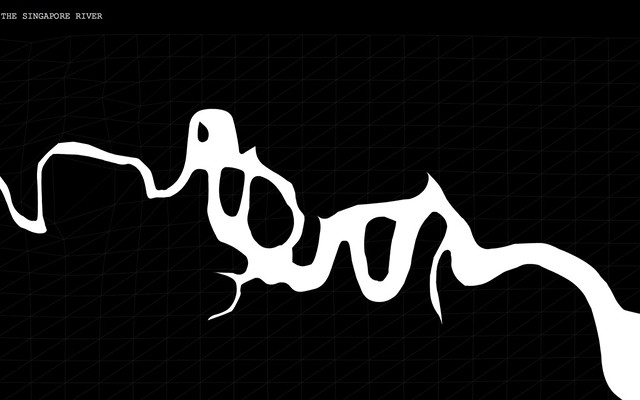

Goal 4: CAN AS3CLIENT USE THE BLOBS/TOUCHEVENTS AS EVENTS IN MY RIVER APP?

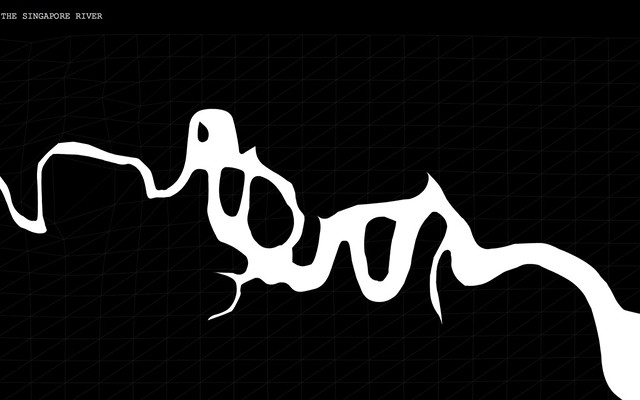

My original application was made with Flash Builder 4 and currently looks something like the image above. My aim by Wednesday afternoon is to do the following:

- integrate multiple TouchEvents from kinect into it

- trace/function for keyboard recalibration of size of hand blobs

- reduce lag/latency

More info on flash.ui.Multitouch and other TouchEvents
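As a rough sketch of the first item on the list, this is how a display object in the river app might listen for touch events. It assumes that as3kinectUtils.fireTouchEvent ends up dispatching standard `TouchEvent.TOUCH_BEGIN` and `TouchEvent.TOUCH_END` events to the objects under a blob, which I have not confirmed. The class name `RiverHotspot` and its size and colour are made up for illustration.

```actionscript
package {
    import flash.display.Sprite;
    import flash.events.TouchEvent;

    // Hypothetical hotspot for the river app; not part of as3kinect.
    public class RiverHotspot extends Sprite {
        public function RiverHotspot() {
            // Draw a simple rectangle so there is something to hit.
            graphics.beginFill(0x3366CC);
            graphics.drawRect(0, 0, 120, 120);
            graphics.endFill();

            // Assumption: blobs arrive as standard TouchEvents on this object.
            addEventListener(TouchEvent.TOUCH_BEGIN, onTouchBegin);
            addEventListener(TouchEvent.TOUCH_END, onTouchEnd);
        }

        private function onTouchBegin(e:TouchEvent):void {
            // touchPointID tells simultaneous touches (several hands) apart.
            trace("touch begin", e.touchPointID, e.stageX, e.stageY);
            alpha = 0.5; // visual feedback while touched
        }

        private function onTouchEnd(e:TouchEvent):void {
            trace("touch end", e.touchPointID);
            alpha = 1;
        }
    }
}
```

For real touch hardware, Flash also needs `Multitouch.inputMode = MultitouchInputMode.TOUCH_POINT` before these events arrive. Whether that setting matters for events dispatched from code by as3kinect is something I have not checked.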

My work entitled "\\" or "The Singapore River as a Psychogeographical Faultline II" will be at the Singapore Local Stories Gallery (at the end of the Titanic exhibition) at the Marina Bay Sands ArtScience Museum, from this Saturday (29th October 2011) onwards. Some artifacts from Pulau Saigon will also be shown there so please come to see them when you can.

To find out more about Pulau Saigon come down THIS THURSDAY EVENING, AT THE SUBSTATION (27th October 2011), when the Singapore Psychogeographical Society will be giving a lecture on psychogeoforensics.